EyePhone

This blog aims to record some relative information about EYEPHONE.

What’s EyePhone

EyePhone is a personal built project, aims to help blind people with their cellphone to detect the obstacles on the road. EyePhone focus on the common road scenes in the city. It has a quite simple use - “Hold the phone, whenever there is a obstacle in the road, remind the user.”

Obstacles could have following categrories: Stairs, Bicycle, Street Lights, Cars, Other People, and other obstacles.

There are some basic idea of how to build this babe:)

Updated at 2018.5.2

Pure empirical method of CNN: We trust our CNN methods, and offer tons of data (input: images output: obstacles detected y/n) to train it, and later given specific input it could make a decision.

Combine Machine Learning with Prior Knowledge: We have some prior knowledge in the obstacle detection, like when something is far it would be small in our sight, while close make it bigger in the sights.

Combine CNN with RNN: This is a very promising idea while I have no idea of where to start now. I found one interesting fact occasionally, that in a badly scaled picture, sometimes it is hard even for human to find what’s in the picture. While it all the pictures are played fast, it would be easily found! (Imagine watching a badly scaled .gif picture!). So maybe we could combine CNN and RNN and contribute to a better performance.

Updated at 2018.5.7 - Start from detection, and build the distance measure on the experience of object detection

Analysis based on the high precision images: use F R-CNN to analysis the position of the obstalces.

Analysis based on the low precision images: use TPN for the temporal information.

Generally speaking, according to the previous papers, it takes more or less 40 seconds to detect some obstacles in the picture. While here it is more promising to use the method with a low precision image and continous temporal information. It we could combine the knowledge of the conteinous temporal information into some sharing information, it would require a far more less computation.

Updated at 2018.5.10 - Maybe a detection will be good enought to get the Regions of Interests in the picture, and we could only train it to get the ability of distance detection.

- Analysis based on SS: use machine learning methods train the model the ability of distance detection, hence there are two conditions we have to reach first. First, we have to make sure Select Search method is good enough to extract all the important information in the picture. Second, this method would have the advantages that it may need less information to train, this scale is handlable in a personal computer, while we have to make sure that we only analysis the RoI of greater weights. (Could we use a neural method to guide the selective search like we do in the AlphaZero?)

Papers

In this part, I would noted some useful information I found in some papers and their inspiration on this project.

Paper: Object Detection from Video Tubelets with Convolutional Neural Networks

Click here for the orginal text

INTRO

Method1: Detect obstacles frame by frame

Method2: Combine Method1 with object tracking

The framework consists of two main modules: 1)a tubelet proposal module that combines object detection and object tracking for tubelet object proposal; 2) a tubelet classification and re-scoring module that performs spatial max-pooling for robust box scoring and temporal convolution for incorporating temporal consistency.

METHOD

Objects in videos show temporal and spatial consistency. The same object in adjacent frames has similar appearances and locations. Using either (object detectors or object trackers) existing object detection methods or object tracking methods alone cannot effectively solve the VID problem.

The combined model has the discriminative ability from object detectors and the temporal consistency from object trackers. It has 2 following

- The tubelet proposal module has 3 major steps:

1) image object proposal (每一帧都进行分析,通过一个算法,将总共可能出现的object的种类从一个很大的类缩减到一个小范围)

2) object proposal scoring (对于每个proposal进行分析,根据他们的confidence从几百个proposal进一步削减到几十个proposal)

3) high-confidence object tracking (对于每一个高confidence的proposal,我们以某一帧为anchor,向前和向后进行track,为了防止track的物体漂移到别的物体或者背景上,当confidence低于某个threshold后停止tracking).

- Tubelet classification and rescoring has 2 steps:

1) Tubelet box perturbation and max-pooling (使用这种方法将多个tubelet box进行pooling得到某一帧的boxes)

2) Temporal convolution and re-scoring (建立一个时序的temporal convolution network 每一个输入x为每一个tubelet box在不同时间【连续输入】的一个三维向量(detection score,tracking score,anchor offset)得到该tubelet box的prediction scores).

Paper: Object Detection in Videos with Tubelet Proposal Networks

Click here for the orginal text

INTRO

The contribution of this paper is that it propose a new deep learning framework that combines tubelet proposal generation and temporal classification with visual-temporal features. An efficient tubelet proposal generation algorithm is developed to generate tubelet proposals that capture spatiotemporal locations of objects in videos. A temporal LSTM model is adopted for classifying tubelet proposals with both visual features and temporal features. Such high-level temporal features are generally ignored by existing detection systems but are crucial for object detection in videos.

Tubelet proposal networks TPN

- Preliminaries on ROI-pooling for regression

FAST R-CNN/ROI POOLING

The object of this part is to use ROI-pooling finding the for a t, the corresponding bi=(xi,yi,wi,ti) denoting the ith box proposal(what could it be!) at time t, where x, y, w and h represent the two coordinates of the box center, width and height of the box proposal.

- Static object proposals as spatial anchors

Let bi1 denote a static proposal of interest at time t =1. Particularly, to generate a tubelet proposal starting at bi1, visual features within the w-frame temporal window from frame 1 to w are pooled at the same location bi1 as ri1,ri2,…,riw in order to generate the tubelet proposal. We call bi1 a “spatial anchor”.

The reason why we are able to pool multi-frame features from the same spatial location for tubelet proposals is that CNN feature maps at higher layers usually have large receptive fields. Even if visual features are pooled from a small bounding box, its visual context is far greater than the bounding box itself. Pooling at the same box locations across time is therefore capable of capturing large possible movements of objects.

- Supervisions for tubelet proposal generation

Our goal is to generate tubelet proposals that have high object recall rates at each frame and can accurately track objects. Based on the pooled visual features ri1,ri2,…,riw at box locations bit, we train a regression network R(·) that effectively estimates the relative movements w.r.t. the spatial anchors.

Once we obtain such relative movements, the actual box locations of the tubelet could be easily inferred. Our key assumption is that the tubelet proposals should have consistent movement patterns with the ground-truth objects.

- Initialization for multi-frame regression layer

Paper: Fast R-CNN

Click here for the orginal text

INTRO

Object detection is a more challenging task compared with image classification. It has 2 primary challenges:

1) numerous candidate object locations (often called “proposals”) must be processed.

2) these candidates provide only rough localization that must be refined to achieve precise localization

While in this paper, based on the work of R-CNN, faster R-CNN has a better performance while at the same time decrease the amout of calculation in the whole process.

R-CNN Drawback

1) Training is a multi-stage pipeline

2) Training is expensive in space and time

3) Object detection is slow

SPPnet(Spatial pyramid pooling networks) soleve the problem that R-CNN does not have sharing computation, it accelerates R-CNN.

FINE-TUNING

Faster R-CNN advantages

- Higher detection quality (mAP) than R-CNN, SPPnet

- Training is single-stage, using a multi-task loss

- Training can update all network layers

- No disk storage is required for feature caching

Faster R-CNN architecture

A Fast R-CNN network takes as input an entire image and a set of object proposals. The network first processes the whole image with several convolutional (conv) and max pooling layers to produce a conv feature map. Then, for each object proposal a region of interest (RoI) pooling layer extracts a fixed-length feature vector from the feature map. Each feature vector is fed into a sequence of fully connected (fc) layers that finally branch into two sibling output layers: one that produces softmax probability estimates over K object classes plus a catch-all “background” class and another layer that outputs four real-valued numbers for each of the K object classes. Each set of 4 values encodes refined bounding-box positions for one of the K classes

对于一个Faster R-CNN来说,输入为输入图片和相关区域(RoI),输入图片通过一个CNN进行处理得到一个卷积特征图(conv feature map),而RoI在这张feature map上找到对应的区域,每个相关区域可以被映射到一个固定长度的特征向量(feature vector),这个feature vector会进入下一步的处理(一个fully connected layer),得到两个output:

1) softmax probability: K个object分类以及background class的可能性

2) per-class bounding box regression offsets: 对每个object class的bounding box位置预测(一个4维向量)

A Fast R-CNN network has two sibling output layers. The first outputs a discrete probability distribution (per RoI), p = (p0, . . . , pK), over K + 1 categories. As usual, p is computed by a softmax over the K+1 outputs of a fully connected layer. The second sibling layer outputs bounding-box regression offsets, tk(tkx,tky,tkw,tkh) for each of the K object classes, indexed by k.tk specifies a scale-invariant translation and log-space height/width shift relative to an object proposal.

Paper: R-CNN: Object detection

Click here for the orginal text

CSDN Relative information

INTRO

TWO KEY INSIGHTS:

1) one can apply high-capacity convolutional neural networks (CNNs) to bottom-up region proposals in order to localize and segment objects

2) when labeled training data is scarce, supervised pre-training for an auxiliary task, followed by domain-specific fine-tuning, yields a significant performance boost.

Since in this thesis region proposals is combined with CNNs, this method is known as method R-CNN: Regions with CNN features.

TWO CHALLENGE AND THE CONTRIBUTIONS:

1) 速度: 经典的目标检测算法使用滑动窗法依次判断所有可能的区域。本文则预先提取一系列较可能是物体的候选区域,之后仅在这些候选区域上提取特征,进行判断。

2) 训练集(the labeled data is scarce and the amount currently available is insufficient for training a large CNN.): 经典的目标检测算法在区域中提取人工设定的特征(Haar,HOG)。本文则需要训练深度网络进行特征提取。可供使用的有两个数据库: 一个较大的识别库(ImageNet ILSVC 2012):标定每张图片中物体的类别。一千万图像,1000类。 一个较小的检测库(PASCAL VOC 2007):标定每张图片中,物体的类别和位置。一万图像,20类。本文使用识别库进行预训练,而后用检测库调优参数。最后在检测库上评测。

Combine the object detection and image classification together

Object detection is what is needed in EyePhone

Objection Detection

Our object detection system consists of three modules.

The first generates category-independent region proposals. These proposals define the set of candidate detections available to our detector.

The second module is a large convolutional neural network that extracts a fixed-length feature vector from each region.

The third module is a set of classspecific linear SVMs.

使用Seletive Search找到region proposals,然后对于每一个region,把他转化成一个固定大小的CNN输入。

对于一个test sample,通过Selective Search得到约2000个region proposals,然后每一个region proposal通过forwad propagation通过一个CNN,在得到每一个region的score之后,我们基于贪心算法,当一个region和另一个region重合,并且那个新的region的score高于某一个threshold,那么选择那个新的region代替两个region。

Paper: Selective Search

Click here for the orginal text

Click here for the project

由于该篇文章和本工程的相关性,该篇论文的注释将会用中文进行。

Selective search is a algorithm in object detection! (not recognition)

INTRO 分割与穷举

在图片的物体识别领域,我们发现很难用一种统一的方法对所有的图片进行分类,我们需要考虑纹理,颜色以及这个物体的真实属性(一个轮子是单独的么?还是说这个轮子属于一辆车?),虽然在一张图片中往往其中一个就可以给出一个分类或者探测,但是在宏观上我们需要将多个元素进行考虑,而且还有一个问题是,往往我们用分割的方法(a unique partitioning of the image through a generic algorithm, where there is one part for all object silhouettes in the image.)很难得到一个正确的结论,在很多图片中,它的结构是intrinsic iherachical的,比如一个桌子上有若干个杯子,杯子里放着乒乓球或者羽毛球,我们需要考虑的是一个hierachical的模型。

通常情况下,我们在考虑object recognition的时候往往需要先进行object detection,然而在这篇论文中使用了另一种思路的方法,to do localisation through the identification of an object,比如以下这个情景,在一张图片中出现了一个穿西装上衣的人,模型可以识别在西装上面有一张脸,然而为了识别整体的人的概念,我们需要模型对于这个概念有了解(prior recognition)。

一个解决方案是穷举法(对于每一个box boundary,它可能包含人/自行车/航空母舰嘛?),显而易见这种方法几乎是computatianal impossible,在这篇文章中提出的selective search的方法结合了segmentation和exhausive search,通过Bottom up segmentation获取图片的结构和每个object的位置【生成对象位置】,通过exhausive search捕捉每一个可能的object的位置信息【捕捉可能的对象的位置】。

Related Work

- Exhaustive Search

As an object can be located at any position and scale in the image, it is natural to search everywhere [8, 16, 36]. However, the visual search space is huge, making an exhaustive search computationally expensive. This imposes constraints on the evaluation cost per location and/or the number of locations considered. Hence most of these sliding window techniques use a coarse search grid and fixed aspect ratios, using weak classifiers and economic image features such as HOG.

While still, HIGH COST! EVEN WITH SOME IMPROVEMENTS, A EXHAUSTIVE SEARCH MAY STILL VISIT OVER 100,000 WINDOWS PER IMAGE.

Instead of a blind exhaustive search or a branch and bound search, we propose selective search. We use the underlying image structure to generate object locations. In contrast to the discussed methods, this yields a completely class-independent set of locations and generating less locations, which means saving a lot of computer power.

- Segmentation

In the previous segmentation research, there are some approaches by segmenting and recognizing objects in parts. They first generate a set of part hypotheses using a grouping method based on Arbelaez. Each part hypothesis is described by both appearance and shape features. Then, an object is recognized and carefully delineated by using its parts, achieving good results for shape recognition.

In their work, the segmentation is hierarchical and yields segments at all scales. However, they use a single grouping strategy whose power of discovering parts or objects is left unevaluated. In this work, we use multiple complementary strategies to deal with as many image conditions as possible.

Selective Search

the selective search should fulfill 3 considerations

捕获全部scale上的Objects:因为一个object可能在一张图片上的任意scale出现,甚至有些objects可能会具有模糊的边界。【通过using a hierachical algorithm解决】

多样化:不存在一种最好的策略将不同的region进行group。每个区域可能通过颜色,纹理等等形成一个object,所以与其采用一种在大部分情况下都行得通的单一的策略,我们想找到一系列策略来对应全部的情形。

易于计算

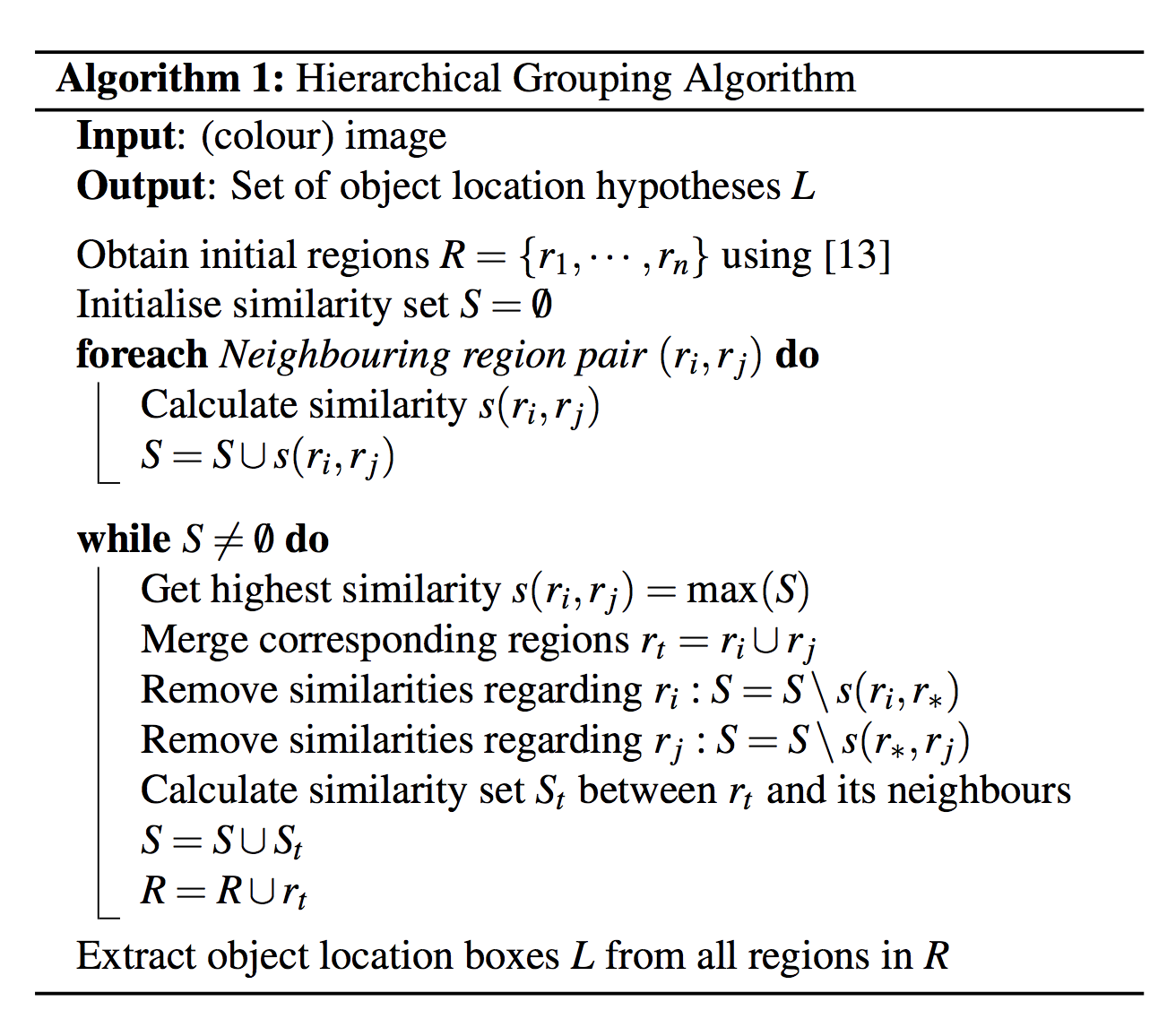

- Selective Search by Hierarchical Grouping

Bottom-up grouping is a popular approach to segmentation, hence we adapt it for selective search. This is a hierarchicalmethods, which means that we could naturally generate locations at all scales by continueing the grouping process until the whole image becomes a single region.

Bottom-up grouping is a popular approach to segmentation, we use this to get the initial regions

输入为一张照片,首先通过the fast method of Felzenszwalb and Huttenlocher创建initial regions R,然后我们使用贪心算法迭代的将regions组合起来,具体的过程为首先,【建立一个空集合S,首先对于每一对region pair,我们计算similarity,然后将他们的相似性放在集合S中】,然后【从S中取出最高的相似性的region pair,将两个融合成为新的region,并且移除与久的region有关的相似性并且计算新的region和它的周围的部分的相似性】,重复第二个步直到S成为空集。

在计算相似性的时候,我们必须保证新的r的特章必须可以通过原来的r计算得到而不需要回到pixel level重新计算。

- Diversification Strategies

Create a set of complementary strategies whose locations are combined afterwards. Some popular strategies are (1) by using a variety of colour spaces with different invariance properties, (2) by using different similarity measures sij, and (3) by varying our starting regions.

Complementary Colour Spaces

Complementary Similarity Measures(scolour,stexture,ssize,sfill)

Complemenetart Starting Regions

- Combining Locations

Felzenswalb Algorithm in image segmentation

Click here for the original paper text

Click here for the Chinese comments

本质上这种算法提供了图像分割的一种非AI的解决方法,对于图中的每一个像素进行考虑,设定超参数约束分割的granularity,然后得到一个分割后的图像,该算法的核心是图像中的颜色分布,所以就导致在其眼中分布是一块一块的,不能对一个对象进行有效的分割和识别。

Another idea for EyePhone

Maybe we could choose the result of selective search as the input for the detection of some certain objects like Bicycle/Stairs/Trees or other Vertical Cylindrical Objects/Horizontal Cylindrical Objects/Box/

Here’s some further information about this modification. Now it could use selective search to output some potential areas for the object detection. We have two things to do:

Object detection: We have to detect the objects in the area, so we have two problems to solve here, first thing is that we are going to extract all the usefull information from the image. The SS method works quite well when we are trying to find the objects, and we could use BaiduCloud Recognition API to recognize the objects.

Distance calculation: The problem left is that we are going to set a set of principles for the model, to calculate the distance of the objects between the user and the objects. In the beginning I put some efforts on help the model to get the idea of building the sense of objects and space. While this requires tons of calculations which is not possible for the individual developers. Hence we have to take the plan B and set the rules by hand (of course this rule could be learned by the model as well. It only depends on whether we have enough trainning sets)